OVERVIEW

One of our projects was set up on AWS. It was built on a microservices architecture and hosted on the managed Kubernetes service (EKS) on AWS.

The challenge arose when a security audit took place during the migration of the project’s infrastructure from AWS to GCP (Google Cloud Platform), a move prompted by difficulties we were facing with Kubernetes orchestration on AWS. The audit team requested multiple changes. One of them concerned database security for sensitive information.

We analyzed multiple solutions, taking into account the audit feedback, the available time, and other constraints, and decided to proceed with one.

Let’s look in a little more detail at the challenges, the possible solutions, and the factors behind the decision.

CHALLENGE

The Security Audit Team outlined a number of required security checks. One of them was securing user / service tokens (sensitive data) stored in the databases, which were not up to the mark to pass the audit.

First, the microservices were written in different technologies such as NodeJS, PHP (Lumen), Golang, etc. and maintained by different development teams. Each service followed its own algorithm for data encryption, and nothing was standardized across the services.

Second, encryption key rotation was missing. Once a key was defined during project setup, it was rarely changed.

Third, not the main one but still important: time constraints. The migration was about to end, and the team had limited time to prepare a solution that could be delivered before the migration was completed.

SOLUTIONS

Considering all the challenges mentioned above, two solutions came into consideration:

Vault

Vault supports multiple features such as Dynamic Secrets, Key Rotation, etc. and helps fulfill all the requirements for protecting sensitive data. However, the setup / configuration part was a little complicated, and it looked like the cloudops team would need a good amount of time to set up the required services and tweak the server architecture. On the other hand, we did not have enough time to complete the transition phase and resubmit for the audit.

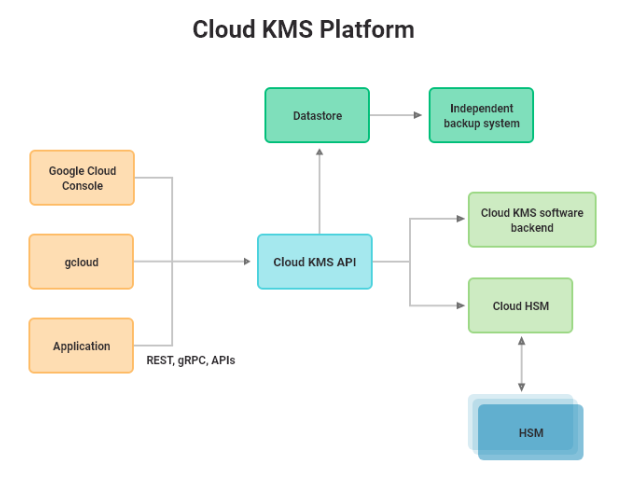

KMS

Cloud KMS was the other solution under consideration, and it looked promising for fulfilling every aspect of the requirements, such as:

- A quick start to the development process without worrying about too many changes at the server architecture level.

- Key rotation with abstracted environments.

- Alignment, as a managed service, with the new cloud structure (as we were moving to GCP).

All these factors looked good enough to win the decision. The management team was also largely aligned with them.

The flow below represents the KMS workflow in asynchronous mode.

Time for action

Once the decision was made, all we had to do was put it into action. The team started looking for standard ways to implement it efficiently.

The team outlined WHAT initial challenges needed to be considered in the refinement phase:

- Decrypt and re-encrypt the existing data without losing any of it.

- Implement the feature across multiple services. The services were built in different technologies, which was another challenge on top of it.

- Prepare an effective, lightweight solution that different teams could adopt easily.

Etc…

Implementation phase

First, it was very important to understand how much data would be affected, as well as the list of features that would be impacted by these changes.

These are some of the key steps in HOW we achieved the solution:

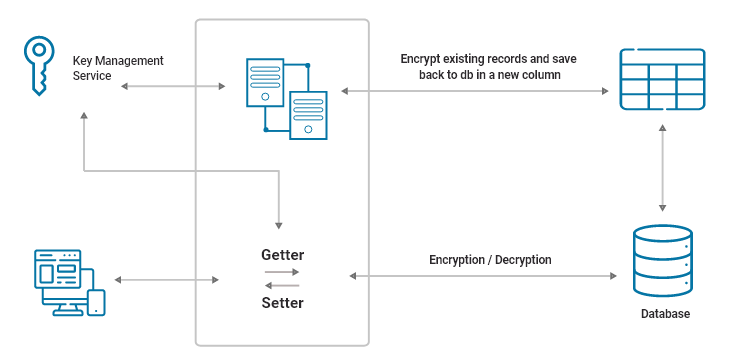

- Created a migration script to add a new column for each existing column that needed to be encrypted. The script was responsible for encrypting the data, and once the process was done, it deleted the old column from the table.

- The new column type supported storing blob data, since the values were converted into blobs after encryption.

- Created getters and setters to add an abstraction and transformation layer on top of the actual attribute. Any additional logic required for decrypting / encrypting the data would live at this level.

- Created documentation for the implementation steps, so other teams could easily follow & implement the solution in the same standard way across multiple tech stacks.

- During deployment, we stopped incoming data requests to the endpoints so that no incremental data would arrive until the deployment was complete. We also executed the migration script first to transform the existing data according to the new solution.

Etc…
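The migration step above can be sketched roughly as follows. This is a minimal illustration, not the script we actually ran: the `users` table, the `token` and `token_enc` column names, and SQLite as the database are all assumptions made for the example, and `placeholder_encrypt` / `placeholder_decrypt` are stand-ins for the real Cloud KMS calls that provide no security at all.

```python
import sqlite3


def placeholder_encrypt(plaintext: bytes) -> bytes:
    # PLACEHOLDER, NOT real encryption: stands in for a Cloud KMS encrypt call.
    return b"enc:" + plaintext[::-1]


def placeholder_decrypt(ciphertext: bytes) -> bytes:
    # Inverse of the placeholder above; stands in for a Cloud KMS decrypt call.
    return ciphertext[len(b"enc:"):][::-1]


def migrate(conn: sqlite3.Connection) -> None:
    """Encrypt the hypothetical `users.token` column into a new blob column."""
    # 1. Add a new blob column next to the existing plaintext column.
    conn.execute("ALTER TABLE users ADD COLUMN token_enc BLOB")

    # 2. Encrypt every existing value into the new column.
    rows = conn.execute("SELECT id, token FROM users").fetchall()
    for row_id, token in rows:
        conn.execute(
            "UPDATE users SET token_enc = ? WHERE id = ?",
            (placeholder_encrypt(token.encode("utf-8")), row_id),
        )

    # 3. Drop the old plaintext column once all rows are encrypted.
    #    The table is rebuilt here so the sketch also runs on older SQLite
    #    versions; many databases can simply use ALTER TABLE ... DROP COLUMN.
    conn.execute("CREATE TABLE users_new (id INTEGER PRIMARY KEY, token_enc BLOB)")
    conn.execute("INSERT INTO users_new SELECT id, token_enc FROM users")
    conn.execute("DROP TABLE users")
    conn.execute("ALTER TABLE users_new RENAME TO users")
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, token TEXT)")
    conn.execute("INSERT INTO users (token) VALUES ('secret-token')")
    migrate(conn)
    print(conn.execute("SELECT id, token_enc FROM users").fetchall())
```

Keeping the old column until every row has been encrypted means a failed run leaves the original data in place, which matches the goal of not losing data during the migration.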

One last challenge was waiting ahead

The solution was implemented and started working as expected, but another challenge was waiting for us. Requests started taking longer than expected, because encrypting each value sent an additional request to the Cloud KMS API. In particular, if a table had multiple encrypted columns, multiple requests were sent every time for a single record.

To fix this, we encapsulated the multiple fields into a single JSON field and then encrypted that single JSON field in one go. Since these were non-indexed, non-searchable fields, we decided to proceed with it.

RESULT

It has now been about 3 years since the solution was delivered for the application. Security audits take place annually, and the team has not received any further notification of an issue related to securing sensitive data in the database.