One of our CRM applications lets its users upload all kinds of documents and media. Being able to upload these important documents to the cloud and then access them from anywhere has been a productivity boon for its users. However, the system only allowed one document to be downloaded at a time. That worked well for a while, but as a wider spectrum of customers started using the application, the need arose to download multiple files as a single archive in one go.

Objective

Create a solution that allows the user to select and download as many files as they want in a single go without affecting the performance of the application itself. The files should download as a single archive.

Challenges / Possible Solutions

While designing the solution, we had to consider the following requirements.

- It should be a scalable solution that can work equally well for 100 as well as 100,000 daily download requests without any degradation in download performance.

- The number of files should not be a constraint. Users could download 10 files or 100 files and that should not pose a challenge to the solution.

- Generated archives must be cleaned up; otherwise they would keep consuming storage and adding to the cost indefinitely.

- Files can be big, so the total download size can be bigger still and can run into hundreds of MB.

- Lastly, it should not impact the performance of the other areas of the application in any way.

It’s important to understand that the files to be downloaded were stored on S3. Since S3 doesn’t have any processing capabilities, it cannot generate the archive itself. So the most important questions that our solutions had to answer were:

- Archive Generation – What/Who would generate the archive

- Archive Streaming – What/Who would stream the archive to the browser

- Archive Storage – Where the archive would be stored (if at all)

Based on these requirements and questions, we came up with the following solutions.

Solution 1 – On-the-Fly Archive Generation + Streaming

We would create a new API in the backend application (Laravel) that would take filenames as parameters, download those files to the server’s local file system, create an archive on the fly, and return the archive as a download in the response.

Pros:

- It’s simple to understand and implement.

- Can be implemented quickly, as it doesn’t require any extra setup.

- We don’t have to worry about auto-deleting or securing generated archives, as they are not stored in any permanent storage like S3.

Cons:

- Reduced Web Servers Performance

- Slow Download Speed

- Would require increasing the request timeout of the web servers / load balancer; otherwise download requests would time out for larger downloads.

- No Resume Download functionality

- Limited Scalability

Solution 2 – Queued Archive Generation + S3 Streaming

We would create a new API in the backend application (Laravel) that would take filenames as parameters, save the “Download Request” in a table, push the actual archive generation task to a queue for background processing, and return an ID as a response immediately, without waiting for the archive generation to finish.

The browser would keep polling a separate API with the given ID until it received the download URL. The download URL would be an S3 pre-signed URL, valid only for a short amount of time.
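The polling side of this flow can be sketched as a small loop. This is only an illustration under assumptions: `fetch_status` stands in for whatever HTTP call the client makes to the polling API, and the `download_url` field, the interval, and the attempt limit are hypothetical names and values that the article does not specify.

```python
import time
from typing import Callable, Dict, Optional


def wait_for_download_url(
    fetch_status: Callable[[str], Dict[str, str]],
    request_id: str,
    interval: float = 2.0,
    max_attempts: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll until the backend reports a download URL, or give up.

    fetch_status is a placeholder for the call to the polling API; it is
    assumed to return a dict that contains "download_url" once the
    archive is ready.
    """
    for attempt in range(max_attempts):
        reply = fetch_status(request_id)
        url = reply.get("download_url")
        if url:
            return url
        # Wait before the next poll, but not after the final attempt.
        if attempt < max_attempts - 1:
            sleep(interval)
    return None
```

Capping the number of attempts keeps a failed or stuck archive job from leaving the client polling forever.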

Pros:

- Asynchronous processing of the files & archive creation

- Immediate response to the user, so users can navigate to other parts of the application.

- Web server capacity and performance are not impacted as heavily as in “Solution 1”, since much of the heavy lifting is moved to the background workers.

- Faster Downloads from S3

Cons:

- If a lot of download requests start coming in, this can impact the web servers’ capacity to handle other core APIs. For example, if one server can handle 1,000 requests a minute and we start receiving 500 download requests per minute, that would cut the capacity left for everything else in half.

- Would require extra scaling at the background worker level, since workers will be doing most of the heavy lifting.

- Limited Scalability – It can’t be scaled in isolation. It will scale along with the whole application.

- Would require a cleanup setup for archives that get stored on S3

Solution 3 – Serverless Archive Generation + S3 Streaming

We would use a Lambda function to generate the archive. The function would receive the file names as arguments, create an archive, and save it back to S3, from where it could be downloaded.
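A minimal sketch of the archive step such a function might perform is shown below. It is not the code we ran in production: `build_archive` is a hypothetical helper, `s3_client` is assumed to behave like a boto3 S3 client (`get_object` / `put_object`), and the archive is built entirely in memory, which assumes the total size fits within the function’s memory limit.

```python
import io
import zipfile
from typing import Any, Iterable


def build_archive(s3_client: Any, bucket: str, keys: Iterable[str], archive_key: str) -> int:
    """Zip the given S3 objects in memory and upload the result.

    s3_client is assumed to expose boto3-style get_object/put_object.
    Returns the size of the uploaded archive in bytes.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for key in keys:
            # Read the whole object into memory (assumption: files are small
            # enough; very large downloads would need a streaming approach).
            body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
            # Keep the full key as the entry name so files with the same
            # base name in different folders do not collide.
            archive.writestr(key, body)
    data = buffer.getvalue()
    s3_client.put_object(Bucket=bucket, Key=archive_key, Body=data)
    return len(data)
```

For downloads that run into hundreds of MB, an in-memory buffer like this may not be enough, and a streaming or multipart upload would be the more likely choice.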

Similar to Solution 2, the browser would keep polling a separate API with the given ID until it received the download URL. The download URL would be an S3 pre-signed URL, valid only for a short amount of time.

This had several advantages: it wouldn’t have any impact on the performance of our application, it would scale to any number of download requests, and, last but not least, it would be cheap.
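As a rough illustration of the polling API’s reply, the sketch below returns the status while the archive is pending and a short-lived pre-signed URL once it is ready. The record fields (`status`, `bucket`, `archive_key`), the status values, and the 5-minute expiry are assumptions made for the example; `s3_client` is assumed to be a boto3 S3 client, whose `generate_presigned_url` method is used here.

```python
from typing import Any, Dict

# Hypothetical status value; the article does not name the statuses.
COMPLETE = "complete"


def poll_response(request: Dict[str, Any], s3_client: Any, expires_in: int = 300) -> Dict[str, Any]:
    """Build the polling API's reply for one stored download request."""
    if request["status"] != COMPLETE:
        # Archive not ready yet: report the current status only.
        return {"status": request["status"]}
    # Sign a GET for the archive that stops working after expires_in seconds.
    url = s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": request["bucket"], "Key": request["archive_key"]},
        ExpiresIn=expires_in,
    )
    return {"status": COMPLETE, "download_url": url}
```

Signing the URL at poll time, rather than storing it once, means the expiry clock starts when the user is actually about to download.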

Pros:

- Unlimited Scalability

- Faster Generation of archive

- Cost-Effective

- No Impact on other areas of our application

- Faster downloads

Cons:

- Extra Setup

- Would require a cleanup setup for archives that get stored on S3

Solution

After comparing all our options, we decided to go with the serverless solution because it gave us scalability and performance at a lower cost. It also meant no impact on our main application stack. Our existing expertise in serverless offerings from AWS also helped us make up our minds.

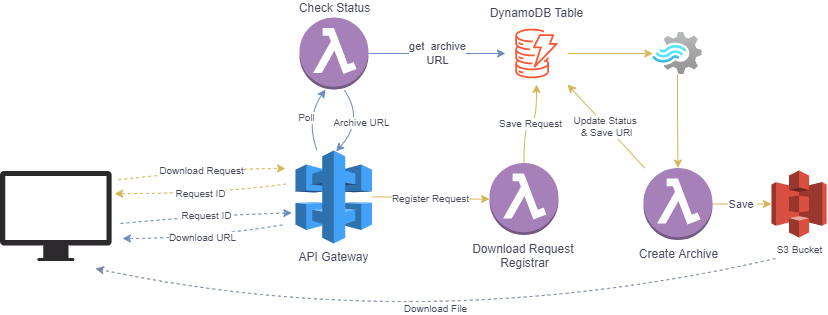

Here’s how the solution worked.

- The frontend would hit an API endpoint, exposed through AWS API Gateway, with the filenames to be downloaded.

- This would trigger a Lambda function that would save the request in a DynamoDB table and return the “Download Request ID” to the frontend.

- As soon as a new record hit the DynamoDB table, a second Lambda function would be triggered. We used DynamoDB Streams as the trigger for this function.

- This function would then create an archive using the filenames received in the event and save the archive back to S3.

- It would then change the request status to complete in the DynamoDB table and store a downloadable URL of the archive alongside it.

- The frontend would keep hitting a polling URL with the “Download Request ID” it got in the 2nd step until it got the download URL in the response. Once it had the URL, it would download the file from S3.
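The stream-triggered part of the steps above can be sketched roughly as follows. The attribute names (`id`, `files`, `status`, `download_url`) are hypothetical, since the article does not describe the table schema, and the stream is assumed to be configured to include the new item image. `table` is assumed to be a boto3 DynamoDB `Table` resource.

```python
from typing import Any, Dict, List


def new_download_requests(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Extract newly inserted download requests from a DynamoDB Streams event."""
    requests = []
    for record in event.get("Records", []):
        # Only react to new records; the status update below produces a
        # MODIFY event on the same stream, which must not start another job.
        if record.get("eventName") != "INSERT":
            continue
        image = record["dynamodb"]["NewImage"]
        requests.append({
            # Stream images use DynamoDB's typed JSON: {"S": ...}, {"L": [...]}.
            "id": image["id"]["S"],
            "files": [item["S"] for item in image["files"]["L"]],
        })
    return requests


def mark_complete(table: Any, request_id: str, download_url: str) -> None:
    """Flag a request as complete and store where the archive can be fetched."""
    table.update_item(
        Key={"id": request_id},
        # "status" is a DynamoDB reserved word, hence the #s placeholder.
        UpdateExpression="SET #s = :s, download_url = :u",
        ExpressionAttributeNames={"#s": "status"},
        ExpressionAttributeValues={":s": "complete", ":u": download_url},
    )
```

A handler would call `new_download_requests(event)`, build and upload an archive for each request, and then call `mark_complete` so that the polling API can start returning the URL.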

Result

The solution has been in production for quite some time and has helped users download more than 100K files so far. It’s working flawlessly, and we have rarely received any complaints about its performance or download speed. And the best part! It literally costs us pennies. The number of download requests we receive falls well under the free tiers of the services we use.

We also configured an S3 Lifecycle rule to automatically delete these archives after a certain period, so that they don’t keep adding to our storage costs.
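For illustration, an expiry rule of this kind could be expressed as below. The `archives/` prefix, the one-day expiry, and the rule ID are assumptions for the example, not the values we used; `s3_client` is assumed to be a boto3 S3 client, whose `put_bucket_lifecycle_configuration` call is used to apply the rule.

```python
from typing import Any, Dict


def archive_expiry_rule(prefix: str = "archives/", days: int = 1) -> Dict[str, Any]:
    """Lifecycle configuration that expires objects under prefix after N days."""
    return {
        "Rules": [
            {
                "ID": "expire-generated-archives",  # hypothetical rule name
                "Filter": {"Prefix": prefix},       # only touch generated archives
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }


def apply_expiry_rule(s3_client: Any, bucket: str, prefix: str = "archives/", days: int = 1) -> None:
    """Apply the rule to a bucket.

    Note: this call replaces the bucket's whole lifecycle configuration,
    so any existing rules would need to be included as well.
    """
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=archive_expiry_rule(prefix, days),
    )
```

Scoping the rule to a prefix matters here, because the original uploaded documents live in S3 too and must not be expired along with the archives.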